How to Install the Middleware the Project Needs

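The project uses the following middleware: MySQL, Nacos, Redis, ZooKeeper, Kafka, and Elasticsearch, plus Kibana and (optionally) Logstash. This guide shows how to install them locally with Docker.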

Installing MySQL

Configuration file: my.cnf

```ini
[client]
default_character_set=utf8

[mysqld]
collation_server = utf8_general_ci
character_set_server = utf8
```
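Creating this file by hand is easy to get wrong. The sketch below writes the my.cnf above into the host directory that is mounted later with -v /mysql/conf:/etc/mysql/conf.d. The function name write_mysql_cnf is hypothetical and is not part of the project.

```shell
# Hypothetical helper (not part of the project): write the my.cnf shown above
# into a host directory. The default matches the -v /mysql/conf mount below.
write_mysql_cnf() {
  local conf_dir="${1:-/mysql/conf}"
  mkdir -p "$conf_dir" || return 1
  # Quoted 'EOF' keeps the content literal (no variable expansion).
  cat > "$conf_dir/my.cnf" <<'EOF'
[client]
default_character_set=utf8

[mysqld]
collation_server = utf8_general_ci
character_set_server = utf8
EOF
}
```

Run write_mysql_cnf, or write_mysql_cnf /another/dir, before docker run. Creating /mysql usually requires root.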

Pull the image

```shell
docker pull mysql:5.7
```

Start the container

```shell
docker run -d \
  -p 3306:3306 \
  --privileged=true \
  -v /mysql/log:/var/log/mysql \
  -v /mysql/data:/var/lib/mysql \
  -v /mysql/conf:/etc/mysql/conf.d \
  -e MYSQL_ROOT_PASSWORD=root \
  --name mysql mysql:5.7
```

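- -p 3306:3306 maps host port 3306 to container port 3306
- --privileged=true gives the container almost the same privileges as the host
- -v /mysql/log:/var/log/mysql persists the MySQL logs on the host
- -v /mysql/data:/var/lib/mysql persists the MySQL data on the host
- -v /mysql/conf:/etc/mysql/conf.d makes MySQL read its configuration from the host. Put the my.cnf from the first step into /mysql/conf on the host before starting the container
- -e MYSQL_ROOT_PASSWORD=root sets the root password
- --name mysql names the container mysql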
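`docker run -d` returns as soon as the container is created, so scripts that use MySQL right afterwards should wait until the port accepts connections. Below is a minimal sketch that assumes bash with /dev/tcp support. The helper name wait_for_port is hypothetical.

```shell
# Hypothetical helper (not part of the project): wait until host:port
# accepts TCP connections, using Bash's built-in /dev/tcp redirection.
wait_for_port() {
  local host="$1" port="$2" max_tries="${3:-60}" i
  for ((i = 1; i <= max_tries; i++)); do
    # Open a connection in a subshell; the subshell closes it when it exits.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    # Sleep between attempts, but not after the last one.
    if ((i < max_tries)); then sleep 1; fi
  done
  return 1
}
```

For example, `wait_for_port 127.0.0.1 3306 60 && echo "MySQL port is open"`. An open port only shows that mysqld is listening. During its first initialization the image may still restart the server, so a real readiness check would also run a query.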

Installing Nacos

Pull the image

To work the same way on Linux, Windows, and macOS (including Apple Silicon), pin the image architecture with --platform linux/amd64. The command is identical on Linux, macOS, and Windows (PowerShell):

```shell
docker pull --platform linux/amd64 nacos/nacos-server:v2.3.0
```

Start the container

Linux / macOS

```shell
docker run -d \
  --name nacos \
  --platform linux/amd64 \
  -e MODE=standalone \
  -e NACOS_AUTH_ENABLE=true \
  -e NACOS_AUTH_TOKEN=SecretKey012345678901234567890123456789012345678901234567890123456789 \
  -e NACOS_AUTH_IDENTITY_KEY=serverIdentity \
  -e NACOS_AUTH_IDENTITY_VALUE=security \
  -p 8848:8848 \
  -p 9848:9848 \
  -p 9849:9849 \
  nacos/nacos-server:v2.3.0
```

Windows (PowerShell)

```powershell
docker run -d `
  --name nacos `
  --platform linux/amd64 `
  -e MODE=standalone `
  -e NACOS_AUTH_ENABLE=true `
  -e NACOS_AUTH_TOKEN=SecretKey012345678901234567890123456789012345678901234567890123456789 `
  -e NACOS_AUTH_IDENTITY_KEY=serverIdentity `
  -e NACOS_AUTH_IDENTITY_VALUE=security `
  -p 8848:8848 `
  -p 9848:9848 `
  -p 9849:9849 `
  nacos/nacos-server:v2.3.0
```

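- --platform linux/amd64 pins the image platform so the same image runs everywhere. On Apple Silicon Macs the amd64 image runs under emulation (for example Rosetta 2)
- MODE=standalone runs Nacos as a single standalone node
- NACOS_AUTH_ENABLE=true enables authentication (recommended for 2.x)
- NACOS_AUTH_TOKEN is the secret key used to sign auth tokens. The Nacos docs recommend a Base64-encoded string whose original key is at least 32 characters long
- NACOS_AUTH_IDENTITY_KEY / VALUE is the server identity key/value pair Nacos uses to recognize requests from other Nacos servers
- 8848 is the web console and HTTP API port
- 9848 is the gRPC port for client requests
- 9849 is the gRPC port for server-to-server requests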
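The token above is the example value from the Nacos documentation. The sketch below generates your own random key instead. It assumes the Base64 recommendation described above. The helper name gen_nacos_token is hypothetical.

```shell
# Hypothetical helper (not part of the project): generate a random secret for
# NACOS_AUTH_TOKEN. 48 random bytes encode to exactly 64 Base64 characters,
# with no '=' padding; tr removes the trailing newline added by base64.
gen_nacos_token() {
  head -c 48 /dev/urandom | base64 | tr -d '\n'
}
```

Use it as `-e NACOS_AUTH_TOKEN="$(gen_nacos_token)"` in the Linux/macOS command. Store the generated value somewhere, because changing the key invalidates tokens that were already issued.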

Verify the installation

Follow the container startup logs:

```shell
docker logs -f nacos
```

Nacos has started once the log shows "Nacos started successfully".

Open the console

In a browser, go to http://localhost:8848/nacos and log in with:

- Username: nacos
- Password: nacos
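You can also check the credentials from a script. The sketch below assumes the HTTP login endpoint /nacos/v1/auth/login described in the Nacos authentication docs, and assumes its JSON response contains an accessToken field. The helper names nacos_login and extract_access_token are hypothetical, and the token extraction is a simple sed match, not a JSON parser.

```shell
# Hypothetical helper: pull the accessToken value out of a login response
# (plain sed match; assumes the token contains no double quotes).
extract_access_token() {
  sed -n 's/.*"accessToken":"\([^"]*\)".*/\1/p'
}

# Hypothetical helper: log in through the Nacos HTTP API and print the token.
# Defaults match the console credentials above.
nacos_login() {
  local base_url="${1:-http://localhost:8848}" user="${2:-nacos}" pass="${3:-nacos}"
  curl -s -X POST "$base_url/nacos/v1/auth/login" \
    --data-urlencode "username=$user" \
    --data-urlencode "password=$pass" | extract_access_token
}
```

If nacos_login prints nothing, the login failed. Check that the container is running and that the credentials are correct.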

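Installing Redis

Pull the image

```shell
docker pull redis:6.0.8
```

Start the container

```shell
docker run -d --name redis -p 6379:6379 redis:6.0.8 redis-server --requirepass qaz123
```

The official redis image does not read a requirepass environment variable. Pass the password to redis-server as the --requirepass argument instead, as shown above.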
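To confirm that the password is actually in effect without installing redis-cli on the host, the sketch below sends AUTH and PING over bash's /dev/tcp. It relies on Redis accepting plain-text "inline" commands terminated by CRLF. The helper name redis_ping is hypothetical, and the password must not contain spaces.

```shell
# Hypothetical check (not part of the project): AUTH + PING over /dev/tcp.
# The body runs in a subshell ( ... ), so fd 3 is closed automatically.
redis_ping() (
  host="${1:-127.0.0.1}"
  port="${2:-6379}"
  password="${3:-qaz123}"
  exec 3<>"/dev/tcp/$host/$port" || exit 1
  # Inline commands: one command per line, each terminated by CRLF.
  printf 'AUTH %s\r\nPING\r\n' "$password" >&3
  IFS= read -r -t 5 auth_reply <&3 || exit 1
  IFS= read -r -t 5 ping_reply <&3 || exit 1
  # Each reply line ends with "\r"; strip it before comparing.
  [ "${auth_reply%$'\r'}" = "+OK" ] && [ "${ping_reply%$'\r'}" = "+PONG" ]
)
```

`redis_ping && echo "Redis is up and the password works"` exits non-zero when AUTH fails. For example, it fails when the container was started without --requirepass.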

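Installing ZooKeeper

Pull the image

```shell
docker search zookeeper
docker pull zookeeper
```

Configuration file

Create the configuration directory:

```shell
mkdir -p /usr/local/zookeeper/conf
```

Put the ZooKeeper configuration file, named zoo.cfg, into this directory. Its content: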

```properties
# The number of milliseconds of each tick
tickTime=2000
# The number of ticks that the initial
# synchronization phase can take
initLimit=10
# The number of ticks that can pass between
# sending a request and getting an acknowledgement
syncLimit=5
# the directory where the snapshot is stored.
# do not use /tmp for storage, /tmp here is just
# example sakes.
dataDir=/data
# the port at which the clients will connect
clientPort=2181
# the maximum number of client connections.
# increase this if you need to handle more clients
#maxClientCnxns=60
#
# Be sure to read the maintenance section of the
# administrator guide before turning on autopurge.
#
# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance
#
# The number of snapshots to retain in dataDir
#autopurge.snapRetainCount=3
# Purge task interval in hours
# Set to "0" to disable auto purge feature
#autopurge.purgeInterval=1

## Metrics Providers
#
# https://prometheus.io Metrics Exporter
#metricsProvider.className=org.apache.zookeeper.metrics.prometheus.PrometheusMetricsProvider
#metricsProvider.httpPort=7000
#metricsProvider.exportJvmInfo=true
```
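Only five lines of zoo.cfg above are active settings. The rest are comments. The sketch below writes a minimal zoo.cfg with just those settings into the directory created earlier. The helper name write_zoo_cfg is hypothetical.

```shell
# Hypothetical helper (not part of the project): write a zoo.cfg containing
# only the active (uncommented) settings from the file above.
write_zoo_cfg() {
  local conf_dir="${1:-/usr/local/zookeeper/conf}"
  mkdir -p "$conf_dir" || return 1
  cat > "$conf_dir/zoo.cfg" <<'EOF'
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/data
clientPort=2181
EOF
}
```

Running write_zoo_cfg with no argument writes to /usr/local/zookeeper/conf, which usually requires root.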

Create a network

This network lets the zookeeper and kafka containers communicate:

```shell
docker network create kafka-net
```

Start the container

```shell
docker run -d \
  --name zookeeper \
  --network kafka-net \
  --privileged=true \
  -p 2181:2181 \
  --restart=always \
  -v /usr/local/zookeeper/data:/data \
  -v /usr/local/zookeeper/conf:/conf \
  -v /usr/local/zookeeper/logs:/datalog \
  zookeeper
```
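To check that ZooKeeper answers on port 2181, you can send its "srvr" four-letter command. The sketch below assumes that "srvr" is on the default command whitelist, which is the case in ZooKeeper 3.5 and later according to its admin guide. The helper name zk_srvr is hypothetical.

```shell
# Hypothetical check (not part of the project): send the "srvr" four-letter
# command over Bash's /dev/tcp and print ZooKeeper's reply.
zk_srvr() (
  host="${1:-127.0.0.1}"
  port="${2:-2181}"
  exec 3<>"/dev/tcp/$host/$port" || exit 1
  printf 'srvr' >&3
  # ZooKeeper closes the connection after replying, so cat stops at EOF.
  cat <&3
)
```

For a single container, `zk_srvr | grep Mode` should print a line like "Mode: standalone".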

Installing Kafka

Pull the image

```shell
docker search wurstmeister/kafka
docker pull wurstmeister/kafka
```

Start the container (replace <host-ip> with the address of your machine)

```shell
docker run -d --name kafka \
  --network kafka-net \
  -p 9092:9092 \
  -e KAFKA_BROKER_ID=0 \
  -e KAFKA_ZOOKEEPER_CONNECT=zookeeper:2181 \
  -e "KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://<host-ip>:9092" \
  -e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 \
  wurstmeister/kafka
```

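- -e KAFKA_ADVERTISED_LISTENERS is the address Kafka advertises to clients for connecting to the broker from outside the container. Clients connect to exactly this address, so on a cloud server it must be the public IP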
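The advertised address has to be filled in by hand, so the sketch below builds the value. It takes the IP as an argument, which you should pass explicitly on a cloud server. Without an argument it guesses the machine's first LAN address: with `hostname -I` on Linux, or `ipconfig getifaddr en0` on macOS, which assumes the active interface is en0. The helper name kafka_advertised_listener is hypothetical.

```shell
# Hypothetical helper (not part of the project): build the
# KAFKA_ADVERTISED_LISTENERS value from an IP (or a guessed LAN IP) and port.
kafka_advertised_listener() {
  local ip="$1" port="${2:-9092}"
  if [ -z "$ip" ]; then
    if hostname -I >/dev/null 2>&1; then
      ip=$(hostname -I | awk '{print $1}')     # Linux: first address listed
    else
      ip=$(ipconfig getifaddr en0 2>/dev/null)  # macOS: assumes interface en0
    fi
  fi
  [ -n "$ip" ] || return 1
  printf 'PLAINTEXT://%s:%s\n' "$ip" "$port"
}
```

For example, pass `-e "KAFKA_ADVERTISED_LISTENERS=$(kafka_advertised_listener)"` to docker run on a local machine, or `$(kafka_advertised_listener <public-ip>)` on a cloud server.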

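Installing Elasticsearch

Kibana, which is installed later, must be able to talk to Elasticsearch, so first create a Docker network for the containers:

```shell
docker network create elk
```

Pull the image

```shell
docker pull elasticsearch:8.5.2
```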

Start the container

```shell
docker run -d --name es \
  --net elk \
  -p 9200:9200 \
  -p 9300:9300 \
  -e "discovery.type=single-node" \
  -e ES_JAVA_OPTS="-Xms768m -Xmx768m" \
  -e "xpack.security.http.ssl.enabled=false" \
  -e "xpack.security.enrollment.enabled=true" \
  elasticsearch:8.5.2
```

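- -p (lowercase) maps ports as host port:container port
- -P (uppercase) publishes the container's exposed ports on random host ports
- --name sets the container name
- --net connects the container to the specified network
- -e sets an environment variable for the container
- -d runs the container in the background
- discovery.type=single-node starts Elasticsearch as a single-node cluster
- ES_JAVA_OPTS="-Xms768m -Xmx768m" sets the JVM heap to 768 MB
- xpack.security.http.ssl.enabled=false disables HTTPS on the REST API, so Elasticsearch is reached over plain HTTP
- xpack.security.enrollment.enabled=true enables enrollment mode (enrollment tokens for Kibana and new nodes). Security stays on, so clients must authenticate with the passwords set in the next step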

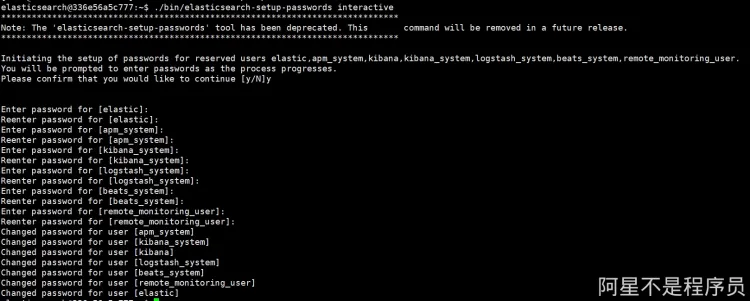

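Set the passwords

```shell
# Enter the container
docker exec -it es /bin/bash

# Set the built-in users' passwords interactively
./bin/elasticsearch-setup-passwords interactive
```

The tool prompts for a password for each built-in user, including elastic (the superuser) and kibana_system (used by Kibana later). It is deprecated in Elasticsearch 8.x. If it refuses to run, ./bin/elasticsearch-reset-password -u elastic -i (and likewise -u kibana_system -i) sets a single user's password interactively.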

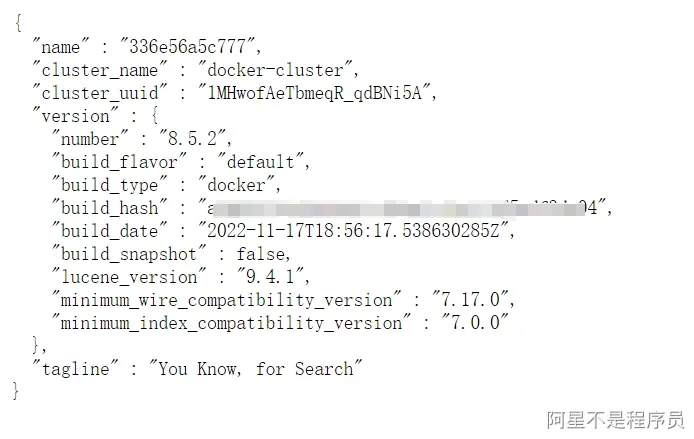

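Once the passwords are set, open http://<ip>:9200 in a browser and log in as elastic with the password you chose. A JSON response describing the cluster (name, version, and so on) means the installation succeeded.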
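The same check works from the command line through the standard _cluster/health endpoint. The sketch below assumes plain HTTP on localhost:9200 (SSL is disabled above) and the elastic user. The helper name es_health is hypothetical.

```shell
# Hypothetical helper (not part of the project): print cluster health as
# the elastic user. -f makes curl fail on HTTP errors such as 401.
es_health() {
  local password="$1" url="${2:-http://localhost:9200}"
  curl -sf -u "elastic:$password" "$url/_cluster/health?pretty"
}
```

`es_health '<elastic password>'` prints the health JSON, including its "status" field. The call fails (non-zero exit) if Elasticsearch is unreachable or the password is wrong.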

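Installing the IK analyzer

IK is a widely used Chinese text-analysis plugin for Elasticsearch. The search feature of the Damai project depends on it.

Install the plugin inside the container

```shell
# Enter the ES container
docker exec -it es /bin/bash

# Install IK with the plugin manager (the plugin version must match the ES version)
./bin/elasticsearch-plugin install https://get.infini.cloud/elasticsearch/analysis-ik/8.5.2

# Leave the container
exit
```

Restart the container so the plugin takes effect

```shell
docker restart es
```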

Verify the installation

```shell
# List the installed plugins
docker exec -it es ./bin/elasticsearch-plugin list
```

If analysis-ik appears in the list, the plugin is installed.

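Test the analyzers

Security is enabled, so the requests must authenticate. Replace <elastic password> with the password set earlier.

```shell
# ik_smart: coarse-grained segmentation, suited to search queries
curl -X POST "localhost:9200/_analyze" -u "elastic:<elastic password>" \
  -H 'Content-Type: application/json' -d'
{
  "analyzer": "ik_smart",
  "text": "中华人民共和国"
}'

# ik_max_word: fine-grained segmentation, suited to indexing
curl -X POST "localhost:9200/_analyze" -u "elastic:<elastic password>" \
  -H 'Content-Type: application/json' -d'
{
  "analyzer": "ik_max_word",
  "text": "中华人民共和国"
}'
```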
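The _analyze response is a JSON object with a "tokens" array, where each entry has a "token" field. The sketch below prints just the tokens, one per line, which makes the two analyzers easier to compare. The helpers ik_tokens and extract_tokens are hypothetical. They use grep/cut rather than a JSON parser and build the request body by string interpolation, so the text must not contain double quotes or backslashes.

```shell
# Hypothetical helper: print the "token" values of an _analyze response.
extract_tokens() {
  grep -o '"token":"[^"]*"' | cut -d'"' -f4
}

# Hypothetical helper: run an analyzer (e.g. ik_smart) on some text.
ik_tokens() {
  local analyzer="$1" text="$2" password="$3"
  curl -s -u "elastic:$password" -H 'Content-Type: application/json' \
    -X POST "http://localhost:9200/_analyze" \
    -d "{\"analyzer\": \"$analyzer\", \"text\": \"$text\"}" | extract_tokens
}
```

For example, run `ik_tokens ik_smart 中华人民共和国 '<elastic password>'` and then the same call with ik_max_word. The fine-grained analyzer should return more tokens.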

Installing Kibana

Kibana should be the same version as Elasticsearch (8.5.2 here).

Pull the image

```shell
docker pull kibana:8.5.2
```

Start the container

```shell
docker run -d --name kibana \
  --net elk \
  -p 5601:5601 \
  -e ELASTICSEARCH_HOSTS=http://es:9200 \
  -e ELASTICSEARCH_USERNAME="kibana_system" \
  -e ELASTICSEARCH_PASSWORD="<kibana_system password>" \
  -e "I18N_LOCALE=zh-CN" \
  kibana:8.5.2
```

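- -p 5601:5601 maps the Kibana port
- -e ELASTICSEARCH_HOSTS=http://es:9200 sets the Elasticsearch address. Containers on the same Docker network can reach each other by container name
- -e ELASTICSEARCH_USERNAME="kibana_system" sets the Elasticsearch user Kibana connects as
- -e ELASTICSEARCH_PASSWORD sets that user's password (the kibana_system password set earlier)
- I18N_LOCALE=zh-CN switches the Kibana UI to Chinese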
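Kibana takes a while to start. Until it is ready it answers with HTTP 503 ("Kibana server is not ready yet"). The sketch below polls until it gets any other response. It relies on curl printing 000 as the status code when the connection fails. The helper name wait_for_kibana is hypothetical.

```shell
# Hypothetical helper (not part of the project): poll Kibana until it stops
# refusing connections (000) or answering 503 while it starts up.
wait_for_kibana() {
  local url="${1:-http://localhost:5601}" max_tries="${2:-60}" code i
  command -v curl >/dev/null || return 1
  for ((i = 1; i <= max_tries; i++)); do
    code=$(curl -s -o /dev/null -w '%{http_code}' "$url")
    if [ "$code" != "000" ] && [ "$code" != "503" ]; then
      echo "Kibana answered with HTTP $code"
      return 0
    fi
    if ((i < max_tries)); then sleep 2; fi
  done
  return 1
}
```

Once Kibana is ready, an unauthenticated request is normally redirected to the login page, so a 3xx code is expected.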

After Kibana has started, open http://<ip>:5601. The Kibana login page means it started successfully. Log in as elastic.

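Installing Logstash (optional: skip it if you don't need to browse logs in Elasticsearch; the project starts fine without it)

Logstash uses the same version as Elasticsearch and Kibana: 8.5.2.

Pull the image

```shell
docker pull logstash:8.5.2
```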

Prepare the configuration files

Create the configuration directories

Linux / macOS

```shell
mkdir -p ~/logstash/config
mkdir -p ~/logstash/pipeline
```

Windows (PowerShell)

```powershell
New-Item -Path "$HOME\logstash\config" -ItemType Directory -Force
New-Item -Path "$HOME\logstash\pipeline" -ItemType Directory -Force
```

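Create logstash.yml

This file assumes the elastic user's password is elastic. Replace it with the password you set earlier (the same applies to the pipeline file below).

Linux / macOS

```shell
cat > ~/logstash/config/logstash.yml << 'EOF'
http.host: "0.0.0.0"
xpack.monitoring.elasticsearch.hosts: [ "http://es:9200" ]
xpack.monitoring.elasticsearch.username: "elastic"
xpack.monitoring.elasticsearch.password: "elastic"
EOF
```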

Windows (PowerShell)

```powershell
@"
http.host: "0.0.0.0"
xpack.monitoring.elasticsearch.hosts: [ "http://es:9200" ]
xpack.monitoring.elasticsearch.username: "elastic"
xpack.monitoring.elasticsearch.password: "elastic"
"@ | Out-File -FilePath "$HOME\logstash\config\logstash.yml" -Encoding UTF8
```

Create the pipeline configuration file

Linux / macOS

```shell
cat > ~/logstash/pipeline/logstash.conf << 'EOF'
input {
  tcp {
    port => 5047
    codec => json {
      charset => "UTF-8"
    }
  }
}

filter {
  # Convert timeMillis into Elasticsearch's @timestamp
  date {
    match => ["timeMillis", "UNIX_MS"]
    target => "@timestamp"
  }

  # Remove fields that are not needed
  mutate {
    remove_field => ["instant", "endOfBatch", "loggerFqcn", "threadId", "threadPriority"]
  }

  # If a source field exists, flatten it for easier querying (optional)
  if [source] {
    mutate {
      add_field => {
        "sourceClass" => "%{[source][class]}"
        "sourceMethod" => "%{[source][method]}"
        "sourceFile" => "%{[source][file]}"
        "sourceLine" => "%{[source][line]}"
      }
    }
  }
}

output {
  elasticsearch {
    hosts => ["http://es:9200"]
    index => "damai-logs-%{+YYYY.MM.dd}"
    user => "elastic"
    password => "elastic"
  }

  # For debugging: also print every event to the console
  stdout {
    codec => rubydebug
  }
}
EOF
```

Windows (PowerShell)

A single-quoted here-string (@' ... '@) writes its text verbatim, so the quotes and %{...} references need no escaping and the file matches the Linux/macOS version.

```powershell
@'
input {
  tcp {
    port => 5047
    codec => json {
      charset => "UTF-8"
    }
  }
}

filter {
  date {
    match => ["timeMillis", "UNIX_MS"]
    target => "@timestamp"
  }

  mutate {
    remove_field => ["instant", "endOfBatch", "loggerFqcn", "threadId", "threadPriority"]
  }

  if [source] {
    mutate {
      add_field => {
        "sourceClass" => "%{[source][class]}"
        "sourceMethod" => "%{[source][method]}"
        "sourceFile" => "%{[source][file]}"
        "sourceLine" => "%{[source][line]}"
      }
    }
  }
}

output {
  elasticsearch {
    hosts => ["http://es:9200"]
    index => "damai-logs-%{+YYYY.MM.dd}"
    user => "elastic"
    password => "elastic"
  }

  stdout {
    codec => rubydebug
  }
}
'@ | Out-File -FilePath "$HOME\logstash\pipeline\logstash.conf" -Encoding UTF8
```

In Windows PowerShell 5.1, -Encoding UTF8 writes a byte-order mark (BOM), which Logstash may fail to parse. PowerShell 7 writes UTF-8 without a BOM. The same applies to logstash.yml above.

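How the pipeline works:

- Input: listens on TCP port 5047 and decodes incoming logs as JSON
- Filter: converts the timestamp, removes unneeded fields, and flattens the source field for easier querying
- Output: writes to Elasticsearch, one index per day named damai-logs-<date> (damai-logs-%{+YYYY.MM.dd}), and also prints each event to the console for debugging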
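Before wiring up the application, you can push a hand-made event through the pipeline. The sketch below assumes the application emits Log4j2 JSON-layout-style events carrying the fields the filter refers to (timeMillis, source.class, and so on). The field values are made up, and the helper names make_test_event and send_test_event are hypothetical. The message must not contain double quotes.

```shell
# Hypothetical helper: build one JSON log event in the shape the filter expects.
# timeMillis is epoch milliseconds (second precision is enough for a test).
make_test_event() {
  local msg="${1:-hello from bash}"
  local now_ms=$(( $(date +%s) * 1000 ))
  printf '{"timeMillis":%s,"level":"INFO","loggerName":"test","message":"%s","source":{"class":"Demo","method":"main","file":"Demo.java","line":1}}\n' \
    "$now_ms" "$msg"
}

# Hypothetical helper: send one event to the Logstash TCP input (port 5047).
send_test_event() (
  exec 3<>"/dev/tcp/${2:-127.0.0.1}/${3:-5047}" || exit 1
  make_test_event "$1" >&3
)
```

After running `send_test_event "pipeline test"`, the event should appear in `docker logs logstash` (rubydebug output) and in the current day's damai-logs index.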

Start the container

Linux / macOS

```shell
docker run -d \
  --name logstash \
  --net elk \
  -p 5047:5047 \
  -v ~/logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml \
  -v ~/logstash/pipeline:/usr/share/logstash/pipeline \
  -e "xpack.monitoring.enabled=true" \
  logstash:8.5.2
```

Windows (PowerShell)

```powershell
docker run -d `
  --name logstash `
  --net elk `
  -p 5047:5047 `
  -v "$HOME\logstash\config\logstash.yml:/usr/share/logstash/config/logstash.yml" `
  -v "$HOME\logstash\pipeline:/usr/share/logstash/pipeline" `
  -e "xpack.monitoring.enabled=true" `
  logstash:8.5.2
```

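- --net elk joins the elk network so Logstash can reach Elasticsearch and Kibana
- -p 5047:5047 maps the port on which Logstash receives logs from the applications
- -v mounts logstash.yml and the pipeline directory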

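Verify the deployment

Check the startup logs:

```shell
docker logs -f logstash
```

After a successful start you should see lines like:

```text
[INFO ] logstash.agent - Pipelines running
[INFO ] logstash.inputs.tcp - Starting tcp input listener {:address=>"0.0.0.0:5047"}
```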

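Check the Elasticsearch indices:

```shell
curl -u "elastic:<elastic password>" "http://localhost:9200/_cat/indices?v"
```

Example output:

```text
health status index uuid pri rep docs.count docs.deleted store.size pri.store.size
yellow open damai-logs-user-service-2026.01.07 D8LU-HunTDOt0Jm2hRcjAw 1 1 154 0 281.3kb 281.3kb
```

The index name in this sample also contains the service name (user-service). With the pipeline configuration above, indices are named damai-logs-YYYY.MM.dd. The damai-logs-* pattern used in Kibana matches both.

A yellow status is expected: in single-node mode the replica shards cannot be allocated.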
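On a cluster with many indices, the _cat output gets long. The sketch below keeps only the damai-logs-* rows and prints health, index name, and document count, which are columns 1, 3, and 7 of the `?v` output shown above. The helper name damai_indices is hypothetical.

```shell
# Hypothetical helper (not part of the project): filter `_cat/indices?v`
# output on stdin down to the damai-logs-* indices.
# Columns: 1=health, 3=index, 7=docs.count; NR > 1 skips the header line.
damai_indices() {
  awk 'NR > 1 && $3 ~ /^damai-logs-/ { print $1, $3, $7 }'
}
```

Usage: `curl -s -u "elastic:<elastic password>" "http://localhost:9200/_cat/indices?v" | damai_indices`.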

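Application integration

The Damai project's log4j2.xml already contains the Logstash integration, commented out by default. Uncomment it to start sending logs to Logstash.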

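Viewing logs in Kibana

- Open Kibana in a browser at http://localhost:5601 and log in
- In the left menu, click "Management" → "Stack Management"
- Click "Data Views" (called "Index Patterns" before Kibana 8.0) → "Create data view"
- Enter the index pattern damai-logs-*, choose the time field (@timestamp), and save the data view
- Click "Discover" in the left menu to see the logs in real time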
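If you rebuild the environment often, you can script the data view instead of creating it in the UI. The sketch below assumes the Kibana 8.x data views HTTP API (POST /api/data_views/data_view, which requires a kbn-xsrf header). Check the Kibana API reference for your version before relying on it. The helper names data_view_body and create_data_view are hypothetical.

```shell
# Hypothetical helper: JSON body for a data view (title pattern + time field).
data_view_body() {
  printf '{"data_view":{"title":"%s","timeFieldName":"%s"}}' \
    "${1:-damai-logs-*}" "${2:-@timestamp}"
}

# Hypothetical helper: create the data view through Kibana's HTTP API.
create_data_view() {
  local password="$1" kibana="${2:-http://localhost:5601}"
  curl -s -u "elastic:$password" -X POST "$kibana/api/data_views/data_view" \
    -H 'kbn-xsrf: true' -H 'Content-Type: application/json' \
    -d "$(data_view_body)"
}
```

`create_data_view '<elastic password>'` creates the same damai-logs-* data view as the manual steps above. Afterwards it can be selected in Discover.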